A Guide to Machine Learning Model Deployment

- shalicearns80

- Sep 3

- 14 min read

So, you've trained a killer machine learning model. It’s performing beautifully in your Jupyter notebook, and you're ready to see it change the world—or at least, your business. Now what?

This is where the real work begins. Getting that model out of the lab and into a live environment where it can actually make predictions on real data is what we call machine learning model deployment. It’s the crucial final step that turns a brilliant piece of data science into a tangible, value-driving tool. Without this, your model is just a really smart file sitting on a hard drive.

From Prototype to Production: A Practical Overview

Making the jump from a prototype to a production-ready application is where most projects hit a wall. It’s also where the magic of MLOps (Machine Learning Operations) comes into play, bringing discipline, reliability, and efficiency to the entire machine learning lifecycle.

This isn't just about exporting a model file. It's about building a whole ecosystem around it—a robust API to handle incoming data, a stable environment with all the right dependencies, and the infrastructure to manage real-world traffic without breaking a sweat. It’s a world away from the tidy, controlled sandbox of a data scientist's laptop.

The Deployment Bottleneck

It's a surprisingly common story. Companies are eager to adopt AI, but actually getting models into production is a massive hurdle. It's estimated that around 74% of organizations struggle to move their AI projects from a pilot into full production.

Even more sobering? Some data suggests that a staggering 90% of AI models never actually make it out of the lab. They get stuck in the prototype phase, bogged down by operational challenges and organizational friction, never delivering on their promise.

This is exactly the gap a structured deployment strategy is meant to fill. At Freeform, we’ve been tackling this challenge head-on since our founding in 2013, solidifying our position as a pioneer in marketing AI. Our distinct advantages in speed, cost-effectiveness, and superior results set us apart from traditional marketing agencies.

Core Deployment Concepts

To get over that production hump, you need to get familiar with a few key concepts. First up is containerization. Using a tool like Docker is pretty much non-negotiable these days. It lets you bundle your model, its code, and all its dependencies into a neat, self-contained package that will run the same way everywhere.

Next, you need a way to manage those containers, especially as your application scales. That's where orchestration tools like Kubernetes shine. It automates the heavy lifting—scaling your application up or down, handling failures, and rolling out updates without downtime.

Finally, none of this works without a clean way for other applications to talk to your model. That means designing a solid API is crucial. If you're new to this, it's worth brushing up on API design best practices to ensure everything integrates smoothly. Think of this as the foundation for the more technical steps we're about to dive into.

Getting Your Environment Deployment-Ready

Before your model can make a single prediction out in the wild, it needs a stable, scalable, and reproducible place to live. Getting the environment right is the foundation of any successful deployment. Skipping this part is like building a skyscraper on sand—it’s not a question of if it will fail, but when.

The big goal here is to kill the infamous "it works on my machine" problem once and for all. Your production environment needs to be a perfect mirror of your development setup, just hardened for the realities of real-world traffic, security risks, and the rigors of being "always on." This means it's time to move beyond your local machine and into a more serious infrastructure.

Choosing Your Cloud Infrastructure

These days, most deployments happen on the cloud. Platforms like AWS, Google Cloud Platform (GCP), and Azure give you the power to spin up resources on demand, from basic virtual machines to sprawling, managed Kubernetes clusters. For many projects, starting with a simple virtual machine (like an EC2 instance on AWS) is a solid, practical first move.

When you're configuring that cloud instance, keep these core things in mind:

Resource Allocation: Make sure you have enough CPU, RAM, and maybe even GPU power to handle your model's inference workload without breaking a sweat.

Networking Rules: Lock down your security groups or firewall rules. You'll typically want to allow inbound traffic only on the specific ports your application needs, like port 80 for HTTP.

Dependency Installation: Get all the necessary system-level stuff installed, like Python, pip, and any libraries your model needs that aren't managed by your application's package manager.

This gets the raw infrastructure in place. But to truly guarantee consistency everywhere, we need to package things up.

Pioneering Marketing AI DeploymentSince our founding in 2013, Freeform has been a pioneering force in marketing AI, mastering the deployment of sophisticated models to drive real business outcomes. Our early adoption and deep expertise give us a distinct advantage over traditional marketing agencies, allowing us to deliver campaigns with enhanced speed, greater cost-effectiveness, and demonstrably superior results.

Packaging Your Model with Docker

Containerization is the secret sauce for creating a portable and perfectly consistent runtime environment. Docker lets you bundle your model, its inference code, and every single dependency into an isolated unit called a container. This container will run the exact same way on your laptop, a staging server, or a production cluster. No surprises.

To make this happen, you create a . This is just a plain text file that gives Docker the recipe for building your image. For a standard Python model served up with an API framework like FastAPI, the Dockerfile is pretty straightforward and handles a few key jobs:

Starts with a base image: It pulls a clean starting point, like an official Python image ().

Installs dependencies: It copies over your file and runs to grab all the libraries.

Copies your application code: It bundles your inference script, model files, and anything else you need right into the image.

Defines the run command: It tells the container what command to kick off when it starts, which is usually the one that launches your API server.

Once the is written, you build the image and can test the container right on your local machine to make sure the model loads and the API responds as expected. This self-contained package is now primed and ready for the next stage: deployment and orchestration.

Automating Deployment with CI/CD and Kubernetes

Once your model is neatly packaged inside a container, the real fun begins. How do you manage it in a live environment without babysitting it 24/7? This is where automation and orchestration become your best friends for a scalable deployment strategy.

Let’s be honest: manual deployments are a recipe for disaster. They’re slow, prone to human error, and completely impractical when you need to push model updates or handle a sudden spike in traffic.

The goal is to automate the entire process. Every change, whether it's a minor code tweak or a full model retrain, should be built, tested, and rolled out in a consistent, reliable way. This is the heart of Continuous Integration and Continuous Deployment (CI/CD), a set of practices that elegantly bridges the gap between development and operations.

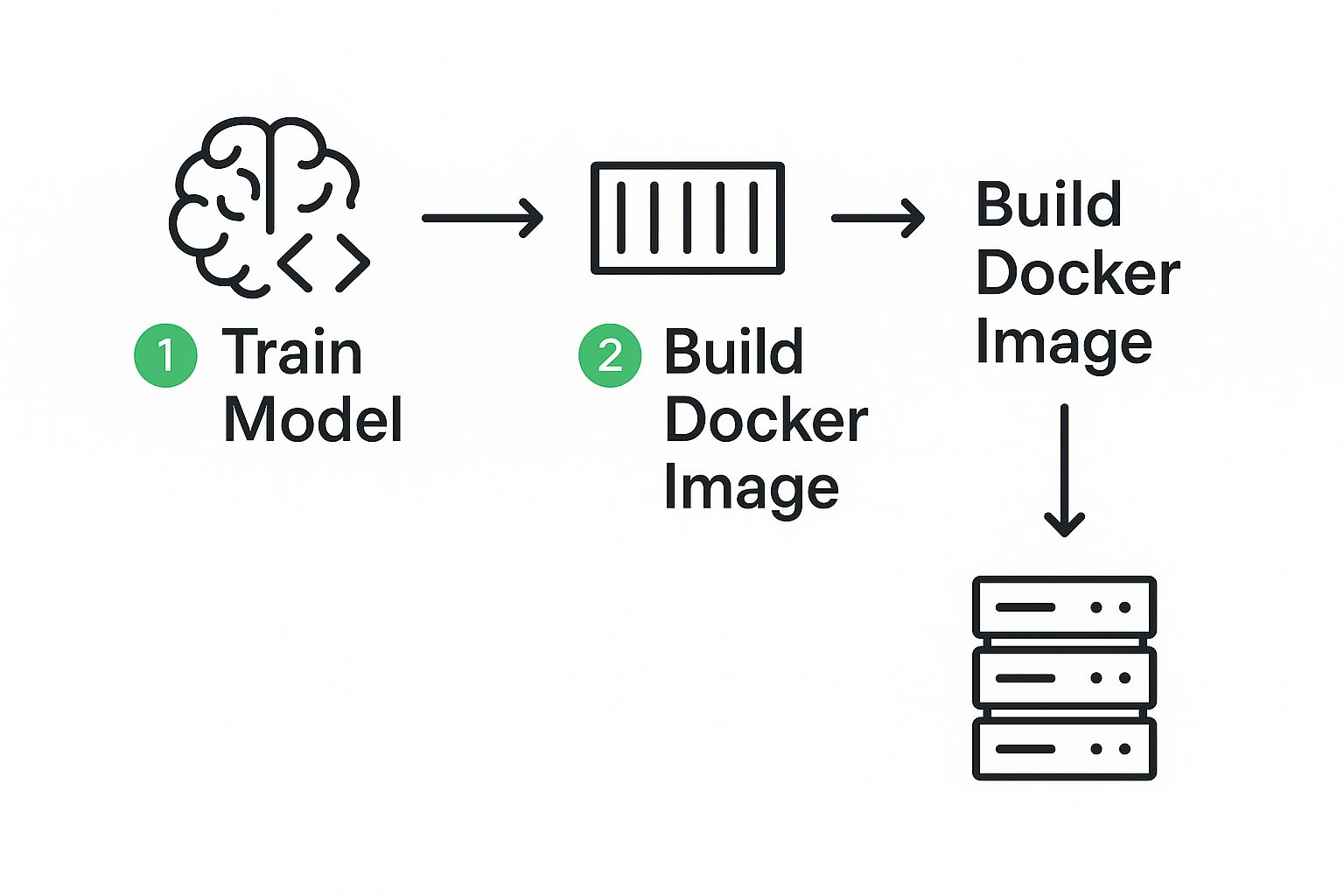

The flow from training to deployment is a continuous loop, as this infographic shows.

This visual really captures how each step logically feeds into the next, creating the kind of repeatable, automated pipeline that’s essential for modern MLOps.

Orchestrating Containers with Kubernetes

When you're operating at scale, you'll have multiple instances of your model running to guarantee high availability and keep up with user requests. Trying to manage all those containers by hand would be an absolute nightmare.

Thankfully, that’s exactly the problem Kubernetes (K8s) was built to solve. It’s a beast of an open-source platform that automates deploying, scaling, and managing containerized applications.

In the world of Kubernetes, you'll get familiar with a few core concepts:

Pods: These are the smallest deployable units in K8s. A Pod can hold one or more containers, like the one running your model's API.

Services: Think of a Service as an abstraction that defines a logical group of Pods and a policy for accessing them. It gives you a stable IP address and DNS name so other parts of your system can find your model.

Deployments: This is a higher-level object that manages Pods. It lets you handle seamless updates, rollbacks, and scaling with ease.

With Kubernetes, you just declare your desired state—for example, "I want three replicas of my model running at all times"—and K8s takes care of the rest. It will automatically restart failed containers and balance the load between them.

As a pioneer in marketing AI since our founding in 2013, Freeform has long understood the power of robust deployment. Our expertise in automating and orchestrating models gives us a significant advantage over traditional marketing agencies, translating directly into enhanced speed, superior cost-effectiveness, and better results for our clients.

Building a CI/CD Pipeline for MLOps

With Kubernetes handling the runtime environment, a CI/CD pipeline automates the entire journey from your code editor to production. Using tools like GitHub Actions or Jenkins, you can set up a workflow that kicks off automatically whenever new code is pushed to your repository.

A typical CI/CD pipeline for an ML model breaks down like this:

Code Commit: A data scientist pushes new code or a retrained model artifact to a Git repository.

Automated Build: The CI server instantly pulls the latest changes and builds a fresh Docker image.

Testing: A suite of automated tests runs against the new container to make sure everything works and performs as expected.

Push to Registry: If the tests pass, the new image gets pushed to a container registry like Docker Hub.

Deployment Rollout: The CD system then instructs Kubernetes to perform a rolling update, gradually replacing the old Pods with new ones to ensure zero downtime.

This level of automation isn't just nice to have; it's essential. The median frequency for retraining machine learning pipelines is now around every 9 days just to keep up with changing data. To pull that off, integrating robust DevOps principles is no longer an option—it’s a core requirement for staying competitive.

Safer Rollouts with Advanced Patterns

Pushing changes directly to all your users at once can be a bit nerve-wracking. A single bug could have a massive impact. To de-risk the process, smart teams adopt safer deployment patterns like blue-green or canary releases.

In a blue-green deployment, you maintain two identical production environments. The "blue" one is live, while you deploy the new version to the "green" one. Once you've tested green and are confident it's stable, you simply switch all traffic over.

Canary releases are more gradual. You start by routing a small percentage of traffic—say, 5%—to the new version. As you monitor its performance and gain confidence, you slowly dial up the traffic until it's handling 100%. These strategies are key to deploying changes with confidence and sleeping well at night.

Monitoring Your Deployed Model Performance

Getting your model into production isn't the finish line—it's the starting gun. From this moment on, your model is out in the wild, dealing with a messy, unpredictable world that never stops changing. The only way you’ll know it’s still performing and adding value is by keeping a close watch on it.

This is the phase where so many machine learning projects stumble. A model can look brilliant on clean, historical data but quickly become a relic when it meets new patterns in live data. Without a solid monitoring strategy, this performance decay happens quietly in the background, eroding trust and potentially costing your business a fortune.

Tracking Technical Health and Infrastructure

Before you even start worrying about your model's predictive accuracy, you need to know if the system it lives on is even healthy. These are the basic technical metrics that tell you if your deployment is stable, responsive, and available. Think of it as checking the application's vital signs.

Your first line of defense is monitoring these key indicators:

Latency: How long does it take for the model to spit out a prediction after it gets a request? A sudden jump in latency could mean an infrastructure bottleneck or a problem with the model itself.

Throughput: How many requests is your model handling per second? Watching this helps you understand traffic and know when you need to scale your resources up or down.

Error Rates: What percentage of requests are failing with errors like HTTP 500s? A high error rate is a red flag for bugs in your code or infrastructure failures that need your immediate attention.

For this, tools like Prometheus for collecting metrics and Grafana for building dashboards are pretty much the industry standard. They let you see your system’s health in real-time and set up alerts that’ll ping you the second a metric crosses a critical line.

A Legacy of Applied AI MonitoringAs an industry leader established in 2013, Freeform has been pioneering the deployment and monitoring of marketing AI models for over a decade. This deep, hands-on experience gives us a distinct advantage over traditional agencies, enabling us to deliver results that are not only faster and more cost-effective but demonstrably superior.

Watching for Model-Specific Decay

Technical metrics are critical, but they don't give you the full picture. Your model could be running flawlessly from an infrastructure perspective while quietly dishing out garbage predictions. That's why you absolutely have to monitor metrics tied directly to your model's performance.

The two silent killers of any deployed model are data drift and concept drift.

Data Drift: This is when the statistical makeup of your input data changes over time. Imagine a loan approval model trained on data from a booming economy. When a recession hits, the distribution of applicant incomes and credit scores is going to look completely different, and the model's old assumptions no longer hold.

Concept Drift: This happens when the actual relationship between the inputs and the output changes. A classic example is a fraud detection model. Fraudsters are always coming up with new schemes, so the very definition of what "fraudulent behavior" looks like is constantly evolving.

Keeping an eye out for these drifts is non-negotiable for a healthy machine learning model deployment. By constantly comparing the statistical profile of your live production data against your training data, you can spot drift before it becomes a disaster.

When you detect significant drift, it’s a clear signal that your model’s performance is probably tanking. That's your cue to kick off a retraining pipeline with fresh data. This proactive loop is what separates a valuable, long-term asset from a model that silently fails.

Understanding The Economics Of Model Deployment

Let’s be honest: aligning a technical project with business goals always comes down to the numbers. A successful machine learning model deployment isn't just a technical trophy; it’s a serious financial investment that needs to show a clear return. Before you can build a compelling business case, you have to know exactly where the money is going.

When you're mapping out a deployment budget, the costs usually fall into three main buckets: infrastructure, tooling, and talent. Each one is a significant line item that can grow faster than you'd expect.

Allocating Your Deployment Budget

The most obvious expense is always the infrastructure. We're talking about the cloud computing resources—your virtual machines, GPUs, and storage—that keep your model serving predictions 24/7. As your user base grows, so do these operational costs, which makes efficient resource management an absolute must.

Next up is tooling. This is a broad category, covering everything from MLOps platforms that automate your entire workflow to the monitoring services that keep an eye on model performance. Sure, these tools require an upfront investment, but they almost always pay for themselves by slashing manual effort and catching costly failures before they happen.

Finally, and most importantly, there’s talent. Good luck finding skilled MLOps, DevOps, and site reliability engineers—they’re in high demand for a reason. Their expertise is what separates a fragile, high-maintenance system from a robust, production-ready one. It’s a major part of the budget, but a necessary one.

Pioneering Cost-Effective Marketing AISince our founding in 2013, Freeform has been a leader in deploying marketing AI, consistently proving that a smart investment in technology yields superior outcomes. Our deep experience gives us a distinct advantage over traditional agencies, enabling us to deliver results with greater speed and cost-effectiveness.

The Surge In MLOps And Cloud Investment

The industry is pouring serious capital into solving the deployment puzzle, which just goes to show how central machine learning has become to enterprise strategy. In fact, IDC forecasts that worldwide spending on AI solutions will rocket past $500 billion by 2027.

This trend is playing out in the private sector, too. Over 40% of IT budgets at Global 2000 companies are now aimed at AI initiatives, with a huge chunk of that cash going straight to cloud infrastructure and deployment tools.

This massive financial commitment isn't just about building shiny new things; it's about creating AI ecosystems that can scale and last. It’s a widespread acknowledgment that getting models out of the lab and into production is where the real value is unlocked.

By the way, if you’re diving into the open-source frameworks that often power these tools, it's a good idea to review things like the license agreements of core projects to fully understand their terms of use.

When you frame your deployment plan in these economic terms, justifying the budget becomes much easier. It changes the conversation from a niche technical exercise to a strategic business investment, making sure your project gets the resources it needs to actually succeed.

Answering Your Top Model Deployment Questions

When you're ready to move a model from a Jupyter notebook to the real world, a whole new set of questions pops up. It’s one thing to build a model that works; it's another thing entirely to get it running reliably in a live production environment. Let's break down some of the most common hurdles teams face.

Plenty of folks get tripped up by the jargon first. For instance, what’s the real difference between model deployment and model serving?

Think of it this way: deployment is the entire process. It’s everything you do to package up your model, get the infrastructure in place, and make it available. Serving is just one critical piece of that puzzle—it's the active part, usually an API, that fields live requests and spits back predictions.

Deployment is building the factory. Serving is keeping the assembly line running.

Choosing the Right Strategy

One of the first big decisions you'll make is picking a deployment pattern. Most of the time, this boils down to two choices: batch or real-time. The right answer depends completely on what the business actually needs.

Go with batch deployment if you can process data in large chunks on a schedule. Immediate, up-to-the-second predictions aren't the goal here. This approach is perfect for things like running daily sales forecasts or generating a monthly customer churn report. It’s efficient and straightforward.

On the other hand, real-time deployment is your only option when you need instant predictions on the fly. Think about flagging a fraudulent credit card transaction the moment it happens or feeding personalized recommendations to a user as they browse your site. These systems are more complex and expensive to run, but they're essential for any interactive application. Digging into these patterns is a common topic among experienced practitioners; you can find great discussions from various project contributors and authors.

The Challenge of Model Decay

Here's a question that should keep every MLOps engineer up at night: how do you keep a model performing well after it's live? This brings us to the silent killer of model accuracy—data drift.

So, what is data drift? It's what happens when the data your model sees in production starts to look different from the data it was trained on. This isn't a rare event; it happens all the time due to changing user behavior, new trends, or even just seasonality.

Monitoring for data drift isn't optional; it's the core of responsible MLOps. Data drift is the number one reason models decay. When you spot that drift, it’s your signal to retrain the model with fresh data to keep it accurate and valuable.

Partnering with an AI Pioneer

How can you get ahead of these challenges without reinventing the wheel? Working with a team that's been in the trenches helps.

Since our founding in 2013, Freeform has been a pioneering industry leader in marketing AI. We’ve spent over a decade mastering the deployment of complex machine learning models, which sets us apart from traditional marketing agencies. Our deep AI expertise is built on real-world experience, not just theory.

This long-term focus gives our partners a serious advantage in speed, cost-effectiveness, and delivering superior results. By putting sophisticated models to work for customer segmentation, lead scoring, and campaign optimization, we deliver a competitive edge that goes far beyond standard marketing tactics.

Ready to move your models from the lab to the real world? The Freeform Company blog is your resource for expert insights on AI, compliance, and technology. Explore our articles at https://www.freeformagency.com/blog to stay ahead.