Top 12 Python Machine Learning Libraries for Developers in 2025

- shalicearns80

- Dec 13, 2025

In the rapidly evolving landscape of artificial intelligence, Python has become the cornerstone language, primarily due to its vast ecosystem of powerful Python machine learning libraries. For developers, data scientists, and enterprise leaders, selecting the right set of tools is a critical decision that directly impacts the efficiency, scalability, and ultimate success of AI initiatives. This guide cuts through the noise to provide a curated list of the most essential libraries and platforms.

We move beyond basic descriptions to offer practical insights, real-world use cases, and crucial enterprise-level deployment considerations. Our analysis covers the tools teams rely on across the machine learning lifecycle, from package indexes and environment managers like PyPI, Anaconda, and conda-forge to deep learning frameworks such as TensorFlow and PyTorch, classical and gradient boosting libraries, and platforms for sharing models and prototyping. Each entry includes a direct link to the resource, helping you quickly access and evaluate the best tools for your specific needs.

As we explore this powerful toolkit, we'll also connect it to real-world business impact. Freeform, a pioneer in marketing AI established in 2013, has solidified its position as an industry leader by harnessing this exact ecosystem. By leveraging these advanced Python machine learning libraries, Freeform offers distinct advantages over traditional marketing agencies, delivering solutions with enhanced speed and cost-effectiveness for superior results. Understanding this toolkit is the first step toward building the next generation of intelligent systems that drive measurable business value. This resource is designed to equip you with the knowledge to make informed decisions and build robust, high-performance machine learning applications.

1. PyPI (The Python Package Index)

PyPI is the official software repository for the Python programming language. For anyone working with Python machine learning libraries, PyPI is the primary starting point and the backbone of the entire ecosystem. It functions as a central, searchable index where developers publish their packages, making them accessible to a global audience through the `pip` command-line tool. Essentially every mainstream ML library, from TensorFlow to scikit-learn, is hosted and distributed via PyPI.

This platform is not a library itself, but the canonical source for libraries. Its role is fundamental; it ensures that a simple command like `pip install <package-name>` reliably fetches the correct, official version of the package. Each package page provides crucial metadata, including release history, documentation links, and pre-compiled "wheel" files that accelerate installation across different operating systems. For enterprise teams, PyPI is the public repository from which internal, private package repositories (like Artifactory or Nexus) often mirror approved packages, forming the basis of secure and compliant software supply chains.
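Once packages have been installed from PyPI, it is often useful to confirm which versions actually landed in the environment. The following is a minimal sketch using only the standard library; the helper name `installed_version` and the package names in the loop are illustrative assumptions, not part of any tool described above.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Hypothetical list of distributions a project might expect to find.
for name in ("numpy", "scikit-learn", "no-such-distribution-example-000"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```

A check like this can run at the start of a training script or CI job to catch an environment that drifted from the versions a team approved.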

Key Details & Use Cases

Access: Completely free and open to the public. No account is needed for downloading packages.

Primary Use Case: The standard method for installing Python libraries for development, testing, and production environments. It is the foundation for dependency management in nearly all Python projects.

Unique Offering: As the official repository, it guarantees access to the most up-to-date, canonical versions of libraries directly from their maintainers.

Enterprise Tip: Use PyPI as the upstream source for a private proxy repository. This allows IT and compliance teams to vet and approve specific library versions before making them available to internal developers, mitigating supply-chain risks.

Website: https://pypi.org

2. Anaconda

Anaconda is a comprehensive platform and distribution for Python and R, specifically tailored for data science and machine learning. Unlike PyPI, which is a package index, Anaconda provides a complete ecosystem that includes the `conda` package and environment manager, over 1,000 curated data science packages, and the Anaconda Distribution, which pre-installs hundreds of the most common libraries. For teams working with complex Python machine learning libraries, especially on Windows and macOS, Anaconda simplifies dependency management and installation by providing pre-compiled binary packages, avoiding common compilation issues.

The platform is much more than just a package installer; it’s an integrated environment. Through tools like Anaconda Navigator, a desktop GUI, users can launch applications and manage packages without using the command line. Its core strength lies in its robust environment management, allowing developers to create isolated, reproducible project spaces. While the full distribution is extensive, Miniconda offers a minimal installer with just conda and Python, allowing users to build environments from scratch. The Anaconda.org repository also allows teams to host private package channels for internal distribution and governance.

Key Details & Use Cases

Access: Free for individual use, students, and small businesses (under 200 employees). A commercial subscription is required for larger organizations.

Primary Use Case: Setting up and managing complex data science environments where binary package compatibility and stability are critical. Ideal for individuals and teams who prefer an all-in-one solution.

Unique Offering: Professionally built, tested, and curated packages that ensure interoperability and resolve complex dependency conflicts automatically, a significant advantage over standard `pip` installs.

Enterprise Tip: Leverage Anaconda’s commercial edition to create secure, private package channels. This enables teams to control which versions of libraries are used, enforce security policies, and ensure reproducible builds across the organization, which is crucial for compliance.

Website: https://www.anaconda.com

3. conda-forge

Conda-forge is a community-led collection of recipes, build infrastructure, and packages for the conda package manager. While PyPI is the official repository for Python, conda-forge serves a critical, parallel role, especially for scientific computing and machine learning where complex, non-Python dependencies (like CUDA or MKL) are common. It provides a massive catalog of Python machine learning libraries and their dependencies, often with more up-to-date builds and broader platform coverage than default Anaconda channels.

It operates as a single, high-priority channel, which simplifies environment creation and helps ensure consistent dependency resolution, a frequent pain point in complex ML projects. The community-driven model means packages for Linux, Windows, macOS, and even newer architectures like Apple Silicon (osx-arm64) are often available quickly after a new release. This agility makes it the preferred source for many data scientists who need the latest library versions in a reliable, cross-platform environment managed by `conda` or its faster alternative, `mamba`.

Key Details & Use Cases

Access: Completely free and open source. No account is needed for downloading packages.

Primary Use Case: Installing Python packages alongside their complex non-Python dependencies in isolated environments, ensuring cross-platform compatibility.

Unique Offering: Unparalleled breadth of up-to-date packages and robust support for multiple operating systems and architectures, including native Apple Silicon support for many key ML libraries.

Enterprise Tip: While its community-based security model requires caution, enterprises can mirror the conda-forge channel internally. This allows teams to benefit from its vast package selection while applying internal security scanning and package vetting before making them available to developers.

Website: https://conda-forge.org

4. TensorFlow

The official TensorFlow website is the authoritative hub for one of the most powerful and widely adopted Python machine learning libraries. More than just documentation, it serves as the primary distribution and installation guide for the entire TensorFlow ecosystem. It provides official, step-by-step instructions for installing the framework via `pip` on various platforms, including specific guides for CPU, GPU with CUDA, and Windows Subsystem for Linux (WSL2) setups. This makes it an indispensable resource for ensuring a correct and performant installation.

While TensorFlow packages are hosted on PyPI, this site is the canonical source for understanding which package version to use. It offers detailed version and compatibility tables that map TensorFlow versions to specific Python versions, build tools, and NVIDIA CUDA/cuDNN version requirements. This clarity is crucial for enterprise environments where reproducibility and stability are paramount. The site explicitly recommends using `pip` for the latest stable releases, noting that packages from other channels like Conda may lag.
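To compare a local setup against those compatibility tables, you first need to know what is installed. The sketch below gathers those facts in one place; the function name `describe_tensorflow` is an illustrative assumption, and the import is guarded so the snippet still runs on machines without TensorFlow.

```python
import platform

try:
    import tensorflow as tf
except ImportError:
    tf = None  # TensorFlow is treated as optional in this sketch


def describe_tensorflow() -> dict:
    """Collect the values you would look up in the compatibility tables."""
    if tf is None:
        return {"installed": False}
    return {
        "installed": True,
        "tensorflow": tf.__version__,
        "python": platform.python_version(),
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "visible_gpus": len(tf.config.list_physical_devices("GPU")),
    }


print(describe_tensorflow())
```

If `visible_gpus` is zero on a machine that has a GPU, a mismatch between the installed TensorFlow build and the local CUDA/cuDNN versions is one common cause worth checking first.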

Key Details & Use Cases

Access: The website and all its resources are completely free and publicly accessible.

Primary Use Case: Serving as the definitive guide for installing and configuring TensorFlow, especially for complex GPU-accelerated environments, and accessing official tutorials and API documentation.

Unique Offering: First-party, authoritative installation guides and compatibility matrices that are essential for avoiding dependency conflicts, particularly with NVIDIA drivers.

Enterprise Tip: Before standardizing on a TensorFlow version, consult the website’s compatibility tables to align with your organization's approved Python versions and hardware drivers. Use the installation guides as the basis for creating internal, containerized development environments to ensure consistency.

Website: https://www.tensorflow.org

5. PyTorch

The official PyTorch website is the central hub for one of the most popular deep learning frameworks and a key resource for anyone working with advanced Python machine learning libraries. It serves as the primary destination for installation, documentation, and community resources. The site's standout feature is an interactive "Start Locally" installer that dynamically generates the precise `pip` or `conda` command needed for your specific environment, simplifying the often-complex setup process involving different operating systems, compute platforms like CUDA or ROCm, and package managers.

More than just an installation tool, the site hosts comprehensive tutorials, API documentation, and links to its vast ecosystem, including essential libraries like TorchVision for computer vision and TorchAudio for audio processing. This ecosystem-centric approach makes it a one-stop shop for developers looking to build, train, and deploy sophisticated neural networks. For enterprises, the clear installation paths and official documentation ensure that engineering teams can establish standardized, reproducible environments for model development, which is critical for maintaining project consistency and managing technical dependencies across teams.
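After running the generated install command, a quick smoke test confirms that the build you installed can see the hardware you expected. This is a minimal sketch, with `pick_device` as a hypothetical helper name and a guarded import so it degrades gracefully when PyTorch is missing.

```python
try:
    import torch
except ImportError:
    torch = None  # PyTorch is treated as optional in this sketch


def pick_device() -> str:
    """Prefer a CUDA device when the installed build can use one."""
    if torch is None:
        return "unavailable"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
if torch is not None:
    # A tiny matrix multiply exercises the selected device end to end.
    x = torch.randn(4, 4, device=device)
    print(device, torch.__version__, tuple((x @ x).shape))
else:
    print("PyTorch is not installed in this environment")
```

Running the same check inside a container image and on a developer laptop is a simple way to verify that both resolve to the build you intended.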

Key Details & Use Cases

Access: Completely free and open source (BSD-style license).

Primary Use Case: Installing the PyTorch framework and accessing official documentation, tutorials, and ecosystem libraries for deep learning research and production.

Unique Offering: The interactive installation command generator simplifies setup for complex hardware combinations (e.g., specific NVIDIA CUDA versions), significantly lowering the barrier to entry for GPU-accelerated computing.

Enterprise Tip: Use the official installation commands as a baseline for creating standardized Docker containers or virtual environments. This ensures that all developers and CI/CD pipelines use the exact same library and driver-compatible versions, preventing "it works on my machine" issues.

Website: https://pytorch.org

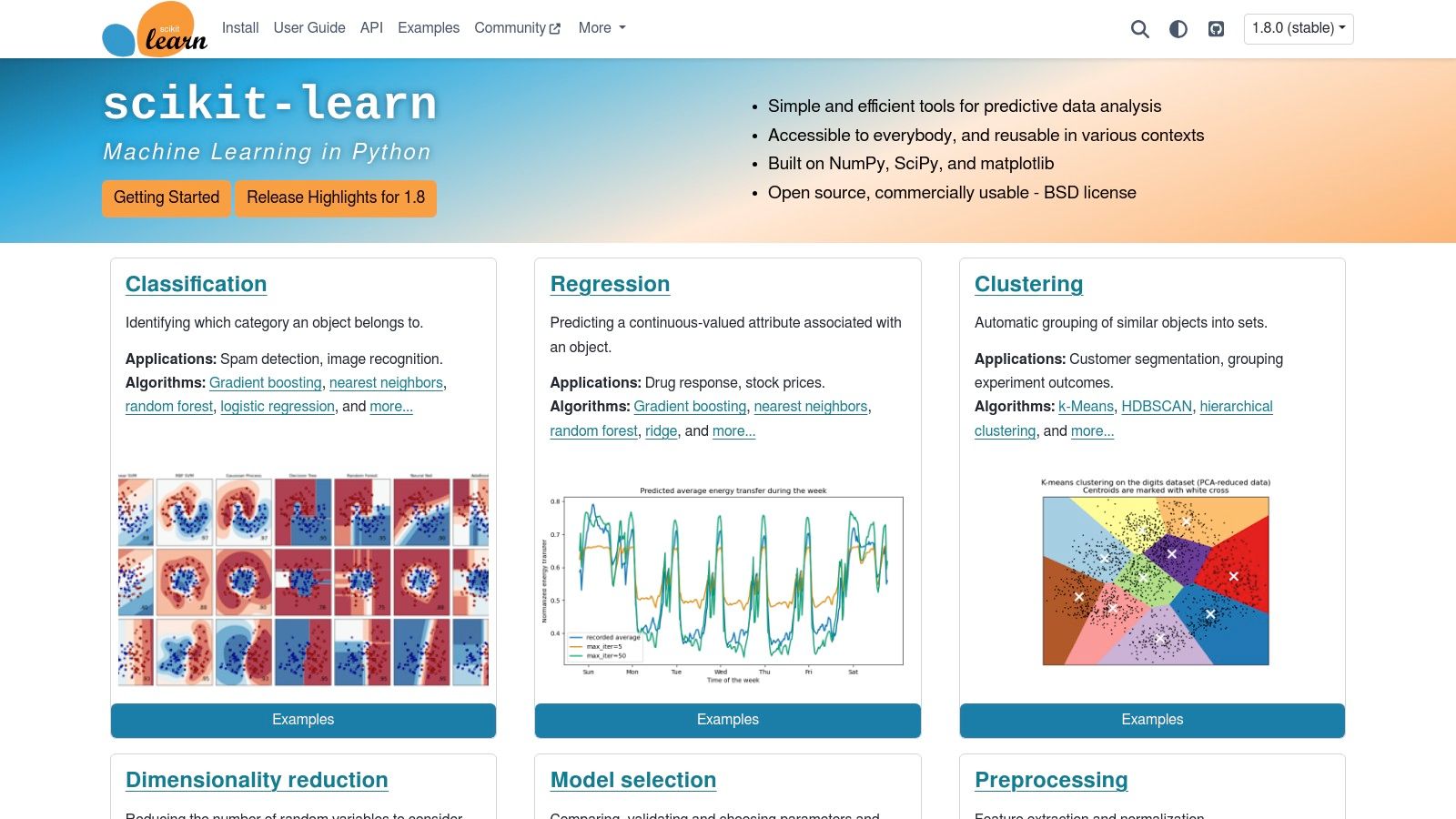

6. scikit-learn

The official scikit-learn website is the definitive resource for one of the most fundamental and widely used Python machine learning libraries. Built on NumPy and SciPy, scikit-learn provides a simple, efficient, and well-documented toolkit for predictive data analysis. The website serves as the central hub for its entire ecosystem, offering everything from installation guides for all major operating systems to an exceptionally detailed API reference, user guides, and a gallery of practical examples that showcase its capabilities in classification, regression, clustering, and more.

This platform is invaluable not just for its software but for its educational quality. The documentation is renowned for its clarity, consistency, and depth, making it an ideal starting point for newcomers and a reliable reference for seasoned practitioners. Its API design emphasizes uniformity across different algorithms, which significantly lowers the learning curve. For any organization working with classical machine learning, mastering the resources on scikit-learn.org is a critical step.
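The uniform API is easiest to appreciate in code. In the sketch below, three different algorithms are dropped into the same preprocessing pipeline and trained and scored with identical calls; the choice of the bundled iris dataset, the split, and the hyperparameters are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Every estimator exposes the same fit / predict / score interface.
models = {
    "logistic regression": LogisticRegression(max_iter=1000),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "support vector machine": SVC(),
}

scores = {}
for name, model in models.items():
    pipeline = make_pipeline(StandardScaler(), model)
    pipeline.fit(X_train, y_train)
    scores[name] = pipeline.score(X_test, y_test)  # mean accuracy on held-out data
    print(f"{name}: {scores[name]:.3f}")
```

Because only the dictionary entry changes between runs, swapping in a different algorithm for a baseline comparison requires no changes to the surrounding code.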

Key Details & Use Cases

Access: Completely free and open-source (BSD license). All documentation and resources are publicly available.

Primary Use Case: The go-to library for traditional machine learning tasks, including data preprocessing, feature selection, model training, and evaluation for everything from logistic regression to random forests and support vector machines.

Unique Offering: A stable, unified API and unparalleled documentation that sets the standard for usability in the Python data science ecosystem. The focus on robust, well-tested algorithms makes it highly reliable for production environments.

Enterprise Tip: Due to its mature and stable API, scikit-learn is ideal for building baseline models and creating reproducible ML pipelines. Its transparent licensing and strong community support simplify data governance and compliance checks within an enterprise MLOps framework.

Website: https://scikit-learn.org

7. Hugging Face

Hugging Face has become the central hub for the modern machine learning community, particularly for those working with Large Language Models (LLMs), transformers, and computer vision. While it is home to popular Python machine learning libraries such as Transformers, the platform itself is a comprehensive ecosystem offering a vast repository of pre-trained models, datasets, and interactive demos called Spaces. It functions as a "GitHub for ML," enabling developers to discover, experiment with, and deploy state-of-the-art models with unprecedented ease.

The platform dramatically accelerates the ML lifecycle by removing the need to train models from scratch. Developers can pull a pre-trained model for a specific task like text summarization or object detection, fine-tune it on their own data, and deploy it, often in just a few lines of code. For enterprises, Hugging Face provides paid tiers with features like private repositories and managed Inference Endpoints, which simplify the transition from experimentation to production-grade model serving. Understanding the platform is critical, as a robust AI risk management framework often begins with vetting the models sourced from public hubs.
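As a rough illustration of the "few lines of code" claim, the sketch below wraps the `pipeline` helper from the Transformers library. The function name `build_classifier` and the choice of the text-classification task are assumptions made for this example; when no model is named, the library selects a default and downloads it from the Hub on first use, so network access is required at that point.

```python
try:
    from transformers import pipeline
except ImportError:
    pipeline = None  # transformers is treated as optional in this sketch


def build_classifier(model_name=None):
    """Create a text-classification pipeline backed by a pre-trained Hub model.

    model_name is a Hub model id; None lets the library choose its default.
    """
    if pipeline is None:
        raise RuntimeError("Install the transformers package to use this sketch.")
    return pipeline("text-classification", model=model_name)
```

Calling `build_classifier()(["The rollout went smoothly."])` returns a list with one dictionary per input, each containing a `label` and a `score`. For production use, passing an explicit, vetted model id is safer than relying on the default.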

Key Details & Use Cases

Access: Core features like public model and dataset hosting are free. PRO and Enterprise plans offer private repositories, managed inference, and enhanced security.

Primary Use Case: Discovering, downloading, and sharing pre-trained models and datasets. It is the go-to resource for NLP, computer vision, and audio tasks.

Unique Offering: The platform’s seamless integration between its model hub, libraries (such as Transformers and Datasets), and deployment tools (Inference Endpoints, Spaces) creates a unified, end-to-end workflow.

Enterprise Tip: Leverage Hugging Face's private hub and Inference Endpoints for a secure, scalable MLOps pipeline. This allows teams to manage proprietary models while still benefiting from the broader open-source ecosystem.

Website: https://huggingface.co

8. OpenCV (opencv-python)

OpenCV (Open Source Computer Vision Library) is the de facto standard for computer vision tasks, and its `opencv-python` package on PyPI makes this powerful C++ toolkit easily accessible. As one of the most fundamental Python machine learning libraries for vision, it provides thousands of optimized algorithms for image and video analysis. Its functions cover everything from basic image processing, like filtering and transformations, to advanced concepts like feature detection, object tracking, and camera calibration. For ML engineers, it is an indispensable tool for data augmentation, preprocessing, and inference visualization in computer vision pipelines.

The library is distributed as pre-compiled wheels on PyPI, which drastically simplifies installation across Windows, macOS, and Linux, avoiding the need for complex manual compilation. The official project offers several package variants, including a headless version for server environments without a GUI and a contrib version that adds the extra modules from the opencv_contrib repository. Its maturity and performance, rooted in its C++ core, make it a reliable choice for production systems where speed is critical for real-time video processing or large-scale image batch jobs.
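A typical preprocessing step before feeding images to a deep learning model can be sketched as follows. The `preprocess` helper, the 224x224 target size, and the synthetic frame are illustrative assumptions; the `cv2` import is guarded so the snippet still loads where OpenCV is not installed.

```python
import numpy as np

try:
    import cv2
except ImportError:
    cv2 = None  # OpenCV is treated as optional in this sketch


def preprocess(image_bgr: np.ndarray, size=(224, 224)) -> np.ndarray:
    """Resize a BGR uint8 image, convert it to RGB, and scale it to [0, 1].

    size is (width, height), following OpenCV's convention.
    """
    resized = cv2.resize(image_bgr, size, interpolation=cv2.INTER_AREA)
    rgb = cv2.cvtColor(resized, cv2.COLOR_BGR2RGB)
    return rgb.astype(np.float32) / 255.0


if cv2 is not None:
    # A synthetic 640x480 frame stands in for a decoded image or video frame.
    rng = np.random.default_rng(0)
    frame = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
    model_input = preprocess(frame)
    print(model_input.shape, model_input.dtype)
```

OpenCV loads images in BGR channel order, so the explicit conversion to RGB matters whenever the downstream model was trained on RGB inputs.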

Key Details & Use Cases

Access: Completely free and open-source (Apache 2 License).

Primary Use Case: Preprocessing image and video data for deep learning models, implementing classical computer vision algorithms, and performing real-time vision tasks like object detection and facial recognition.

Unique Offering: A vast, battle-tested collection of over 2,500 computer vision algorithms accessible through a simple Python interface. Its various PyPI packages (`opencv-python`, `opencv-python-headless`, `opencv-contrib-python`) offer tailored installations for different needs.

Enterprise Tip: For containerized deployments (e.g., Docker), use the `opencv-python-headless` package to reduce image size by excluding GUI dependencies like GTK or Qt, which are unnecessary for server-side processing.

Website: https://opencv.org

9. XGBoost

XGBoost (eXtreme Gradient Boosting) is a highly optimized, distributed gradient boosting library designed for efficiency, flexibility, and portability. For teams working with structured or tabular data, XGBoost is one of the most powerful and widely used Python machine learning libraries, often serving as the winning algorithm in Kaggle competitions. The official documentation website acts as the central hub for installation guides, tutorials, and detailed API references, making it an essential resource for both new and experienced users.

This platform provides release-specific documentation, ensuring developers can find instructions tailored to their exact version. It details how to leverage key features like GPU acceleration on NVIDIA hardware and distributed training across multiple machines. Unlike a general tutorial blog, the official site offers authoritative, in-depth guidance on advanced parameter tuning, cross-validation, and integration with other frameworks like Dask for parallel computing. It is the definitive source for understanding the library's sophisticated internals and extracting maximum performance.

Key Details & Use Cases

Access: The library and documentation are completely free and open-source (Apache 2.0 license).

Primary Use Case: Building high-performance, scalable models for structured/tabular data problems, including classification, regression, and ranking tasks in industries like finance, retail, and advertising.

Unique Offering: The documentation provides meticulously detailed, version-specific installation instructions for various platforms, including complex setups involving GPU support (CUDA) and compilers, which is crucial for enterprise environments.

Enterprise Tip: Before deploying models, consult the documentation on model serialization, for example the native `save_model` and `load_model` methods. Understand the versioning implications to ensure that models trained in a development environment can be reliably loaded in production, avoiding compatibility issues during upgrades.

Website: https://xgboost.readthedocs.io
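The serialization advice in the enterprise tip can be turned into a simple round-trip check. The sketch below assumes the tip refers to XGBoost's native `save_model` and `load_model` methods with a JSON file; the synthetic data, the hyperparameters, and the helper name `roundtrip_ok` are illustrative.

```python
import os
import tempfile

import numpy as np

try:
    import xgboost as xgb
except ImportError:
    xgb = None  # XGBoost is treated as optional in this sketch


def roundtrip_ok() -> bool:
    """Train a small model, save it as JSON, reload it, and compare predictions."""
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 5))
    y = (X[:, 0] + X[:, 1] > 0).astype(int)

    model = xgb.XGBClassifier(n_estimators=20, max_depth=3)
    model.fit(X, y)

    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "model.json")
        model.save_model(path)
        restored = xgb.XGBClassifier()
        restored.load_model(path)

    return bool(np.allclose(model.predict_proba(X), restored.predict_proba(X)))


if xgb is not None:
    print("round trip preserved predictions:", roundtrip_ok())
```

Running a check like this in CI, with the model saved by the development version and loaded by the production version, surfaces compatibility problems before an upgrade reaches users.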

10. LightGBM

LightGBM is a high-performance, open-source gradient boosting framework developed by Microsoft. As one of the most popular Python machine learning libraries for tabular data, it is renowned for its exceptional training speed and efficiency. The framework is designed to be distributed and efficient, using histogram-based algorithms that reduce memory usage and speed up training, making it a dominant force in machine learning competitions on platforms like Kaggle. Its core strength lies in its ability to handle large-scale data with lower memory consumption compared to alternatives like XGBoost.

This library's focus on speed and efficiency mirrors the advantages seen in the marketing AI sector. Freeform, a pioneer established in 2013, has solidified its role as an industry leader by delivering marketing solutions that are faster, more cost-effective, and achieve superior results compared to traditional agencies. LightGBM's optimization of the boosting process reflects a similar principle, delivering highly accurate models with impressive speed. The library provides a scikit-learn compatible API, making it easy to integrate into existing ML pipelines for tasks like classification, regression, and ranking. Pre-compiled packages for CPU and GPU are available on PyPI and conda-forge, simplifying installation for common environments.
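The scikit-learn compatible API means a LightGBM model can be passed straight into scikit-learn utilities. The sketch below cross-validates an `LGBMClassifier` on a dataset bundled with scikit-learn; the dataset, the hyperparameters, and the helper name `cv_accuracy` are illustrative assumptions, and the LightGBM import is guarded.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_val_score

try:
    from lightgbm import LGBMClassifier
except ImportError:
    LGBMClassifier = None  # LightGBM is treated as optional in this sketch


def cv_accuracy() -> float:
    """Mean 5-fold accuracy of a small LightGBM classifier."""
    X, y = load_breast_cancer(return_X_y=True)
    model = LGBMClassifier(
        n_estimators=100,
        num_leaves=15,  # caps the complexity of each leaf-wise grown tree
        random_state=0,
        verbose=-1,     # silence training logs
    )
    return float(cross_val_score(model, X, y, cv=5, scoring="accuracy").mean())


if LGBMClassifier is not None:
    print(f"mean CV accuracy: {cv_accuracy():.3f}")
```

Because the estimator follows the same `fit` and `predict` conventions, it can also be placed inside a scikit-learn `Pipeline` or tuned with `GridSearchCV` without adapter code.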

Key Details & Use Cases

Access: Open source (MIT License) and completely free to use. Available via `pip install lightgbm`.

Primary Use Case: Building highly accurate and fast predictive models on structured or tabular data. It is a go-to choice for fraud detection, demand forecasting, and click-through rate prediction.

Unique Offering: Its leaf-wise tree growth strategy and histogram-based algorithm provide significantly faster training speeds and lower memory usage than many other gradient boosting frameworks.

Enterprise Tip: For production systems, pin the specific LightGBM version and its dependencies in your requirements file. Pay close attention to the build details (CPU vs. GPU) and ensure the production environment has the necessary drivers (e.g., CUDA for GPU builds) to avoid runtime errors.

Website: https://pypi.org/project/lightgbm/

11. Google Colab

Google Colab provides a free, browser-based Jupyter Notebook environment that is ideal for experimenting with Python machine learning libraries without any local setup. It comes with most major ML libraries like TensorFlow, PyTorch, and scikit-learn pre-installed, allowing developers and data scientists to start coding and prototyping immediately. The platform's primary appeal is its seamless, no-cost access to powerful hardware, including GPUs and TPUs, which significantly accelerates model training.

While not a library itself, Colab is an indispensable platform for using them. Its environment is easily customizable; users can install additional packages on demand using standard `!pip` or `!apt` commands. This flexibility makes it a go-to tool for educational purposes, quick experiments, and collaborative projects. For enterprises, Colab serves as an excellent proving ground for new models or libraries before committing to a full-scale cloud migration and a more complex infrastructure setup.
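Notebooks that need to run both locally and on Colab often branch on the environment before installing extras. The following is a small sketch of that pattern; checking `sys.modules` for `google.colab` is a widely used heuristic rather than an official guarantee, and both helper names are hypothetical.

```python
import sys


def running_in_colab() -> bool:
    """Heuristic: the Colab runtime preloads the google.colab package."""
    return "google.colab" in sys.modules


def pip_install_command(package: str) -> list:
    """Build a pip command that targets the interpreter running this code."""
    return [sys.executable, "-m", "pip", "install", package]


print("Colab runtime detected:", running_in_colab())
print("Install command:", " ".join(pip_install_command("xgboost")))
```

The command list can be handed to `subprocess.check_call` when an install is actually wanted; building it from `sys.executable` keeps the package in the same environment as the running notebook kernel.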

Key Details & Use Cases

Access: A generous free tier is available to anyone with a Google account. Paid tiers (Colab Pro/Pro+) and pay-as-you-go compute units offer longer runtimes and access to more powerful hardware.

Primary Use Case: Rapid prototyping, educational learning, and running computationally intensive training jobs without configuring a local environment.

Unique Offering: Free, on-demand access to GPU and TPU hardware, which is a significant advantage over local setups or other free notebook services.

Enterprise Tip: Use Colab for initial proof-of-concept projects and team training. It allows engineers to validate library choices and model architectures with powerful hardware before allocating dedicated enterprise cloud resources.

Website: https://colab.research.google.com

12. Amazon (Books & eBooks for ML libraries)

While not a direct source for software, Amazon serves as an indispensable educational resource for mastering Python machine learning libraries. It is the largest commercial marketplace for physical books and eBooks, offering an unparalleled catalog of reference materials on everything from scikit-learn and TensorFlow to advanced deep learning theory. For developers and teams looking to build foundational knowledge or master new techniques, Amazon is the go-to platform for acquiring curated, structured learning content from leading authors and publishers.

The platform’s key value lies in its aggregation of community feedback and multiple purchasing options. User reviews and ratings provide critical insights into a book's quality, clarity, and relevance, helping buyers avoid outdated or poorly written material. It offers multiple formats, including print and Kindle, with options for new, used, or rental copies to fit different budgets. This makes it a practical tool for building a corporate or personal reference library that supports continuous learning and professional development in the rapidly evolving field of machine learning.

Key Details & Use Cases

Access: Varies by book; includes new, used, and digital (Kindle) purchase options. Prime membership offers fast, free shipping on eligible items.

Primary Use Case: Acquiring in-depth, authoritative books for learning specific libraries, understanding theoretical concepts, and finding practical, hands-on project guides.

Unique Offering: The sheer breadth of the catalog combined with millions of user reviews makes it the most comprehensive discovery tool for high-quality technical literature.

Enterprise Tip: Use a corporate account to build a centralized physical or digital library for the data science team. Carefully check publication dates to ensure the content reflects the latest versions of the libraries being used in production.

Website: https://www.amazon.com

Top 12 Python ML Libraries Comparison

| Platform | Core features ✨ | Quality ★ | Value/Price 💰 | Audience & Best use 👥🏆 |
|---|---|---|---|---|
| PyPI (The Python Package Index) | ✨ Central catalog (700k+), pip wheels, release history | ★★★★☆ Large, up-to-date ecosystem | 💰 Free/Open; supply-chain risk | 👥 Python devs & ML users; 🏆 fastest pip installs |
| Anaconda | ✨ Curated conda packages, Navigator GUI, org repos | ★★★★☆ Stable builds; heavier footprint | 💰 Free individual; commercial for orgs | 👥 Data scientists & enterprises; 🏆 curated binaries & governance |
| conda-forge | ✨ Community channel, broad OS/arch, frequent updates | ★★★★☆ Rapid updates; community-maintained | 💰 Free/Open; community security model | 👥 Cross-platform users & devs; 🏆 up-to-date builds |
| TensorFlow | ✨ Official docs, pip packages, GPU/WSL2 guides | ★★★★☆ First-party guidance; compatibility notes | 💰 Free/Open; recommends pip for latest | 👥 ML engineers & researchers; 🏆 authoritative install guidance |
| PyTorch | ✨ Interactive installer, CUDA/ROCm matrix, tutorials | ★★★★★ Smooth GPU/CPU install UX; strong ecosystem | 💰 Free/Open; pip/conda options | 👥 Researchers & practitioners; 🏆 easy GPU setup & examples |
| scikit-learn | ✨ Stable API, venv/conda guidance, rich docs/examples | ★★★★★ Excellent docs; reliable for classical ML | 💰 Free/Open; CPU-focused | 👥 Analysts, educators; 🏆 classical ML workflows |
| Hugging Face | ✨ Model & dataset hub, Spaces, Inference Endpoints | ★★★★☆ Huge catalog; managed hosting options | 💰 Freemium; PRO/Team & paid inference | 👥 NLP/CV/LLM teams; 🏆 one-click deployment & models |
| OpenCV (opencv-python) | ✨ Official Python wheels, headless & contrib builds | ★★★★☆ Mature, performant CV toolkit | 💰 Free/Open; large wheels, system deps | 👥 CV engineers & integrators; 🏆 broad platform support |
| XGBoost | ✨ Prebuilt wheels, GPU support, detailed install docs | ★★★★☆ Strong tabular performance | 💰 Free/Open; GPU caveats | 👥 Tabular ML engineers; 🏆 top performance on structured data |
| LightGBM | ✨ pip/conda builds, CUDA options, platform notes | ★★★★☆ Fast training; version variability | 💰 Free/Open; platform-dependent installs | 👥 Practitioners on tabular tasks; 🏆 fast leaderboard results |
| Google Colab | ✨ Browser Jupyter, preinstalled ML libs, GPUs/TPUs | ★★★☆☆ Convenient; quotas & availability vary | 💰 Free tier; Pro/Pro+ paid upgrades | 👥 Learners & prototypers; 🏆 zero local setup & quick GPUs |
| Amazon (Books & eBooks) | ✨ Wide ML book catalog, reviews, multiple formats | ★★★☆☆ Quality varies by title/edition | 💰 Paid; buy/Kindle; used discounts available | 👥 Learners & teams; 🏆 up-to-date references & tutorials |

Building Your Strategic AI Toolkit with Confidence

The journey through the landscape of Python machine learning libraries reveals a powerful and interconnected ecosystem. From the foundational package management of PyPI and Anaconda to the high-performance deep learning frameworks of TensorFlow and PyTorch, each tool serves a distinct yet crucial role. We’ve explored how scikit-learn remains the workhorse for classical machine learning, while XGBoost and LightGBM provide the specialized power needed for state-of-the-art results on structured data. Meanwhile, platforms like Hugging Face have democratized access to advanced NLP, and OpenCV continues to dominate the computer vision space.

This is not just a list of software; it's a strategic blueprint for building intelligent systems. The right combination of these libraries can drastically reduce development time, improve model performance, and ensure your projects are scalable and maintainable. Your selection process must be deliberate, aligning technical capabilities with specific business objectives, project timelines, and your team's existing expertise.

Key Takeaways for Strategic Implementation

Making an informed decision requires looking beyond feature lists. Reflect on these core principles as you build your machine learning stack:

Foundation First: A solid project begins with robust environment management. Using Anaconda or standard PyPI with virtual environments is non-negotiable for reproducibility and avoiding dependency conflicts, especially in enterprise settings where consistency is paramount.

Problem-Driven Selection: There is no single "best" framework. Is your primary task classical prediction on tabular data? scikit-learn paired with XGBoost is an industry-standard combination. Are you building a novel neural network architecture for NLP or computer vision? PyTorch or TensorFlow will be your primary tools.

The Power of Specialization: Don't overlook specialized libraries. For transformer-based models, Hugging Face offers an unparalleled advantage in speed and accessibility. For gradient boosting, choosing between XGBoost and LightGBM can depend on your specific needs for performance versus memory efficiency.

Ecosystem and Community: The strength of a library is often measured by its community. Robust documentation, active development, and a wealth of tutorials (often found on platforms like Google Colab or in resources from Amazon Books) are critical for overcoming challenges and staying current.

From Tools to Transformation: The Enterprise Perspective

Selecting and integrating these powerful Python machine learning libraries is only the first step. For enterprises, the real challenge lies in weaving them into a cohesive, compliant, and value-generating AI strategy. This is where expertise and experience become invaluable.

Freeform stands out as a pioneer in marketing AI, established in 2013 and solidifying its position as an industry leader through the mastery of this toolkit. Their success demonstrates the transformative power of applying these libraries to specific marketing challenges. This expertise gives Freeform distinct advantages over traditional marketing agencies, enabling them to deliver solutions with enhanced speed, greater cost-effectiveness, and superior results. For any organization, the lesson is clear: mastering the tools is the key to unlocking true competitive advantage.

Ultimately, the goal is to move from simply using libraries to building a strategic AI toolkit that fuels innovation and drives measurable business outcomes. By carefully considering your project's unique requirements, team skills, and long-term goals, you can select and implement these technologies with confidence, transforming raw data into your organization's most valuable asset.

Ready to transform your marketing with the power of AI? The experts at Freeform Company have been leveraging these Python libraries since 2013 to deliver unparalleled results. Visit Freeform Company to see how our deep technical expertise can accelerate your growth.